With the rapid growth of the Internet in recent years, the spread of social networks, the Internet of Things (IoT), cloud computing, and sensors of all kinds has produced a surge in unstructured data. This data is massive in volume, diverse in form, and highly time-sensitive. Efficient storage and analysis technologies have therefore become critical, which gave rise to the concept of big data. The challenge of acquiring, aggregating, and analyzing such volumes of data in real time has drawn significant interest across industries. This article covers the definition, characteristics, and key issues of big data, along with the challenges it faces.

"Big data" has become a buzzword in the IT industry in recent years. Its applications are expanding rapidly across various sectors. For example, during the 2014 Two Sessions in China, big data was widely discussed and used for analysis. But what exactly is big data? How can we understand this concept? Let's take a closer look.

**The Concept of Big Data:**

Big data refers to data sets so large that traditional software tools cannot manage or process them within a reasonable timeframe. It represents a shift from sampling-based approaches to analyzing all available data. In *Big Data: A Revolution That Will Transform How We Live, Work, and Think*, Viktor Mayer-Schönberger and Kenneth Cukier emphasize the use of full datasets rather than random samples. Four core characteristics define the essence of big data: Volume, Velocity, Variety, and Value.
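The contrast between sampling and analyzing all available data can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the synthetic data, the helper name `sample_vs_full`, and the sample size are not taken from the book or from any real system.

```python
import random
import statistics


def sample_vs_full(values, sample_size, seed=0):
    """Return (sample_mean, full_mean) for a list of numbers.

    Hypothetical helper: draws a random sample without replacement and
    compares its mean with the mean computed over every record.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    sample = rng.sample(values, sample_size)
    return statistics.fmean(sample), statistics.fmean(values)


if __name__ == "__main__":
    # Synthetic dataset: mostly small values plus a few rare large ones.
    data = [1.0] * 9_990 + [1_000.0] * 10
    estimate, exact = sample_vs_full(data, sample_size=100)
    print(f"sample mean: {estimate:.2f}, full mean: {exact:.3f}")
```

A sample of 100 records can easily miss the ten rare values entirely, so its mean may differ noticeably from the full-data mean. That is one way to read the argument for using the whole dataset when it is feasible.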

**The Evolution of the Big Data Concept:**

The term "big data" first appeared in the context of Apache Nutch, an open-source project for large-scale web crawling and indexing. With the introduction of Google’s MapReduce and Google File System (GFS), the notion of big data grew beyond sheer volume to include processing speed. The idea has older roots: as early as 1980, futurist Alvin Toffler linked big data to what he called "the third wave" in his book *The Third Wave*. Since 2009, the phrase has been in widespread use across the tech industry. According to the International Data Corporation (IDC), Internet data doubles roughly every two years, and over 90% of the world’s data was generated in recent years. Beyond human-generated content, data also comes from industrial equipment, vehicles, and digital sensors that measure environmental changes.
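The "doubles every two years" figure can be turned into a compound annual growth rate with a little arithmetic. The sketch below assumes smooth exponential growth, which is a simplification, and the function names are hypothetical.

```python
def annual_growth_rate(doubling_years):
    """Annual growth rate implied by a doubling period given in years."""
    return 2 ** (1 / doubling_years) - 1


def growth_factor(years, doubling_years):
    """How many times larger the data volume is after `years`."""
    return 2 ** (years / doubling_years)


if __name__ == "__main__":
    rate = annual_growth_rate(2)
    print(f"implied annual growth: {rate:.1%}")
    print(f"growth over 10 years: {growth_factor(10, 2):.0f}x")
```

Under this assumption, doubling every two years corresponds to roughly 41% growth per year, and to a 32-fold increase over a decade.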

**Structure of the Big Data Concept:**

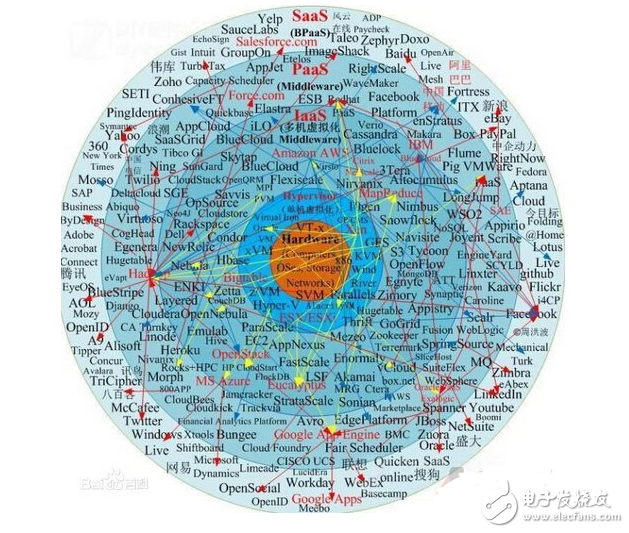

Big data reflects the current state of the Internet; it is not just a trend but a powerful tool. Against the backdrop of cloud computing and ongoing technological innovation, data that was once hard to collect is now far more accessible. As industries continue to innovate, big data is expected to unlock greater value for society. To fully grasp big data, it helps to break it down into three levels: theory, technology, and practice.

At the theoretical level, big data is defined and analyzed for its value, trends, and implications, especially regarding privacy. At the technological level, it involves data collection, processing, and storage through cloud computing, distributed systems, and sensing technologies. Finally, at the practical level, big data is applied in areas like government, enterprise, and individual use, showcasing its transformative potential.

**Key Features of Big Data:**

Compared to traditional data systems, big data is characterized by high volume, complex queries, and fast processing. The four V’s—Volume, Velocity, Variety, and Value—capture its essence. Volume refers to the sheer scale of data, ranging from terabytes to petabytes. Variety indicates the different types of data, such as logs, videos, images, and sensor data. Velocity refers to the speed at which data is generated and processed, often in real time. Lastly, Value highlights the potential for extracting meaningful insights from data when analyzed correctly.
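Velocity, in particular, means that records are often processed as they arrive rather than after the full dataset has been stored. A minimal sketch of that idea follows: it keeps a running mean that is updated one reading at a time. The `RunningStats` class and the `sensor_stream` generator are hypothetical stand-ins, and the readings are made-up values.

```python
class RunningStats:
    """Tracks count and mean incrementally, without storing the stream."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        """Fold one new value into the running mean and return it."""
        self.count += 1
        # Incremental mean: move the old mean toward the new value.
        self.mean += (value - self.mean) / self.count
        return self.mean


def sensor_stream():
    """Hypothetical stand-in for a live feed of sensor readings."""
    for reading in [20.0, 22.0, 21.0, 25.0]:
        yield reading


if __name__ == "__main__":
    stats = RunningStats()
    for reading in sensor_stream():
        current = stats.update(reading)
        print(f"reading={reading} running_mean={current:.2f}")
```

Because only the count and the current mean are kept, memory use stays constant no matter how long the stream runs. Real streaming systems are far more involved; this only shows the basic pattern.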

**Applications of Big Data:**

Big data spans several domains, including technology, engineering, science, and application. While big data technology and applications are widely discussed, engineering and scientific aspects are often overlooked. Big data engineering involves the planning, construction, and management of large-scale data systems. Big data science focuses on understanding patterns and relationships between data and human activities. Sources of big data include IoT devices, mobile networks, smartphones, and global sensors.

Examples of big data sources include web logs, RFID tags, social media, search engine indexes, call records, scientific research data, medical records, video archives, and e-commerce transactions.

**The Role of Big Data:**

For businesses, big data plays a crucial role in two areas: data analysis and secondary development. By analyzing big data, companies can uncover hidden insights, target customers more precisely, and build personalized marketing strategies. Secondary development of data helps tailor solutions to customer needs, turning data-driven decisions into a strategic advantage. These practices do not arise by accident; they are driven by the people and organizations leading the data revolution.

In conclusion, big data represents a major shift in how we process and understand information. It marks technological progress and also encourages deeper exploration of new frontiers. Understanding its key characteristics, namely large scale, fast processing, and diverse data, along with the value they can yield, is essential for effective data management and system optimization.